Imagine a world where your virtual personal assistant — Siri, Alexa, Google Assistant — is more human than you know. Imagine that your queries and commands are sent not to servers where they're processed by proprietary algorithms, but to call centers where they're fielded by low-wage workers. When you ask your device a question — for example, "What bird is this?" — you're connected to a specialist in bird calls. She listens in, asks some follow-up questions, then shares her conclusions. But you never hear her voice — not exactly. Because at some point between her lips and your device, her voice becomes robotic. You may never even know a human being was involved! That's how good this company is at keeping secrets.

This scenario is the premise of Sandra, an audio drama released in 2018 by the podcast network Gimlet.1 Some big Hollywood names were involved (Kristen Wiig, Ethan Hawke, Alia Shawkat), but despite this star-studded cast, Sandra was a flop. Critics blamed the podcast's plot, which went haywire, but not its premise, which they adored. The notion of a Wizard-of-Oz Alexa, a supposed AI that's just a front for human workers: this, they agreed, was an edgy thought experiment, a mind-bending twist on reality as we know it. Unlike "its real-world counterparts," one podcast critic explained, the fictional Sandra is not "AI-driven" at all.2 Imagine that.

Except you don't have to imagine it — you're living it. Sandra's "real-world counterparts" aren't "AI-driven" either — at least, not in the way this critic means. In real life, as in the fictional world of Sandra, intelligent software parses your speech and interprets your words. This process is indeed "AI-driven," and it's called natural language understanding (or, NLU). Then, in Sandra, as in reality, the software decides how to respond. In Sandra, this "decision" involves forwarding your call to the right cubicle. In reality, it means calling up the right script for your device to perform — a script that, in almost every instance, has been written word-for-word by human beings.

I repeat: vanishingly few of the words spoken to date by Siri, Alexa, and their ilk have been the products of natural language generation (or, NLG). Such systems are still too erratic, their voices too inconsistent, their "beliefs" too easily deformed by training data.3 Meanwhile, company lawyers have rightly worried that the public or the courts might treat such digital characters as "[CEO]-level spokespeople" for the company.4 The stakes are high. So, while a software agent may supply a variable or two (e.g., the user's name, the time of day, a relevant sentence from a Wikipedia entry), the shape of most sentences and each variable's place within them have been set by human hands.

Since 2018, I've been getting to know those humans. They have job titles like "interaction designer," "experience writer," or "personality designer," and they work in a field known as voice-user interface (VUI) or voice-user experience (VUX) design. (When you throw text-only chatbots into the mix, the umbrella term is conversation design.) Some of these people work in-house for companies like Amazon and Google. Others work freelance or at specialist design agencies, building Alexa "skills," Google "actions," and other voice-based software applications. Wherever they are, they're a motley crew. Their ranks include not only run-of-the-mill tech workers (software developers, project managers), but also a wide range of experts in language and expression. This includes empirical experts like cognitive scientists and linguists, but also aesthetic experts like writers (poets, novelists, screenwriters, playwrights) and performers (actors, improvisers, musicians, comedians).

Crucially, these are not low-status "ghost workers" of the sort studied by Mary L. Gray and Siddharth Suri.5 They resemble neither the call-center operators in Sandra nor the real-life workers around the world whose digital piecework (microtasks of moderation, quality control, data-tagging, etc.) secretly makes today's technology tick. Instead, they are high-status employees (often salaried full-timers) who have been hired to shape software systems at the level of design. Perhaps that is why conversation designers (unlike, say, social media content moderators) have not been hidden from our view by anything more than an interface.6 On the contrary, their employers have been eager to spread the word about their work, and have encouraged these designers to do the same. Some have held jobs with titles like Chief Evangelist (as Dave Isbitski did at Amazon for Alexa from 2014 to 2020) or Head of Conversation Design Outreach (as Cathy Pearl did at Google from 2018 to 2020), but up and down the org chart, whatever their titles, these people have been in full evangelist mode. They run workshops and give TED Talks; they write whimsical essays and practical explainers. In short, they have filled media outlets and corporate websites with the truth. If we don't know it, this has more to do with us than with them: more to do with what we're inclined to believe about AI, no matter the truth.

In trying to understand the art of conversation design, I of course studied this voluminous written record, but I also interviewed dozens of conversation designers between 2018 and 2022. Given my interests as a historian of the arts, I focused especially on people who had abandoned full-time careers in the arts to take such jobs. In this essay, I account for their presence, as artists, in this corporate technological field and establish a genealogy for the work they are doing. In doing so, I wind up focusing on an old bit of software: the world's first chatbot, ELIZA. I do so because ELIZA has been a touchstone in both academic and popular discourse on conversational AI. And yet in spite of that overexposure, it reveals fresh detail when viewed through the eyes of working conversation designers.

Created in 1965 by the computer scientist Joseph Weizenbaum, ELIZA was technologically simple, even by the standards of its day, but it startled its contemporaries by creating the illusion, however fleeting or fragile, that they were conversing with a person. When I spoke with present-day conversation designers, they often echoed the sentiment expressed by Cathy Pearl in Designing Voice User Interfaces: the field has "not evolved very far from . . . ELIZA."7 In an industry prone to hype, this is a startling admission; it was even more startling when Pearl repeated it to me in a 2019 interview, now speaking as Google's Head of Conversation Design Outreach.8 In the most literal sense, she was wrong. The NLU algorithms behind Google Assistant are light-years beyond ELIZA's jury-rigged mixture of keyword recognition and stalling tactics. And, even in the absence of trustworthy NLG, conversation designers today have more tools at their disposal than Joseph Weizenbaum dared to imagine.

But the more I spoke with conversation designers, and the more I tried to see ELIZA through their eyes (especially the eyes of moonlighting artists among them), the more I realized they were right in one significant way: today's conversational software has scarcely evolved in its deployment of techniques from the arts. Or, rather, after decades of excluding arts-knowledge from the process of creating conversational interfaces, the tech industry was only now beginning to include it again, and it was not yet combining arts-knowledge with technical skill as deftly as Weizenbaum had done back in 1965. After all, in building ELIZA, Weizenbaum had drawn on more than programming skills; he had also ventured into what he called "the domain of the playwright," applying particular lessons from the theater to ELIZA.9 In other words, a half-century before theater-maker Annie Dorsen coined the term, Weizenbaum was practicing "algorithmic theater."10 By hiring artists, some of them literal theater-makers, to assist in designing conversational interfaces, the tech industry today has simply returned to those roots.

Learning to see ELIZA through the eyes of conversation designers not only teaches us something about them and their work; it also offers us a fresh perspective on a historically important bit of software. Over the last sixty years, ELIZA has been analyzed to death by scholars of digital culture, but it has almost always been studied in one way: as a philosophical toy that has something to teach us about technology and humanity, and intelligence, and gender, and language. Scholars have told us that ELIZA reveals the perils of deception11 and the pleasures of suspending disbelief.12 They have told us that ELIZA exposes our habit of projecting humanity and intelligence onto machines (the so-called "ELIZA effect"),13 and they have shown us how such acts of projection can unsettle received notions of humanity and intelligence alike.14 In interpreting ELIZA this way, scholars have followed Weizenbaum's own lead. Later in life, he spun the tale that he had only made ELIZA to prove a point. As a piece of satirical software, ELIZA could teach gullible people not to believe too much in machine intelligence. But before he reduced ELIZA to this cautionary tale, Weizenbaum actually spent years trying to scale ELIZA up and improve its capacity for complex interactions. He envisioned, but never achieved, something much like Siri, Alexa, and Google Assistant. If we go back and read ELIZA in light of these discarded ambitions, it offers us something unexpected, even useful today: a model of how ideas or techniques from the arts might be applied to problems in software or interface design.

Such models are urgently needed. The release of ChatGPT in late 2022 has kicked off a wave of public interest and private investment in generative AI systems, especially systems geared toward conversational NLG. Many of the people engaged in building and selling these systems seem to believe that they will make the arts and humanities obsolete. Why write when you have GPT? Why paint when you have DALL-E? Such confidence is made possible, in part, by their exclusion of the people who actually have and understand these skill-sets. To counteract this computational chauvinism, as well as the technophobic retrenchment it inspires in many artists and humanists, we need two things: more studies of technologists who draw substantially on arts-knowledge, and more attention to artists who enter tech spaces and take up there the "hard translational work" of bridging the gap between "the practices, vocabularies, and mindsets" of technology and those of the arts.15

Imitation Games and Acting Exercises

From the start, the problems posed by conversational AI were understood as both technical and artistic. Specifically, they were understood as essentially theatrical. Alan Turing's famous "imitation game," the first proposed metric for AI, was a test of a computer's conversational ability, but it was also an acting exercise. In this game (later known as the Turing Test), a human and a computer take turns conversing with a judge through a text-only interface. After a while, the judge must guess which conversationalist was a human and which was a machine. If the judge guesses wrongly often enough, then the machine has won the "game," and we must call it intelligent.16

Critics of Turing were the loudest to call this game an acting exercise, by which they meant it only tested for cheap deception. In one edited volume from 1964, for example, two separate philosophers make the very same argument. Michael Scriven compares the computer (he calls it a "robot") who succeeds at Turing's game to an actor who pretends to be angry: "Nothing need be wrong with either the actor's or the robot's performance. What is wrong is that they are performances."17 In a similar vein, Paul Ziff compares the "thinking" machine to an actor who gives an "impeccable" performance of grief. Ziff imagines a fellow audience member turning to him and asking, "Why is [the actor] so miserable?"—and Ziff imagines responding, with a smirk, "He isn't."18 In other words: performance and reality have nothing to do with one another. A machine doesn't think, just as an actor doesn't feel. As a historian of performance, I'm left wondering: had these men ever met actors? Watched a film? Gone to the theater? They were writing in the heyday of Method acting, when most audiences would expect actors to experience what they performed, especially when strong emotions like anger or grief were involved. Instead, they treat acting as an emblem of glib fakery.

But long before this comparison (machine as actor) occurred to skeptics, it occurred to Alan Turing — and it caused him no concern. During a BBC Radio panel discussion in 1952, Turing observed that "a machine being put through one of my imitation tests . . . would have to do quite a bit of acting."19 The question before him at the time was rather speculative: would intelligent machines have appetites, suffer neuroses, throw tantrums? Maybe, Turing mused, but "such effects would probably be distinctly different from the corresponding human ones, [though] recognizable as variations on them."20 That's why "a bit of acting" would be required — not to hide the machine's vacuity but to express its inner life. It might have feelings, impulses, and thoughts of its own, but in order to pass the test it would have to channel them into a fictional human role. It would become a Method actor of humanity.

If conversational software is "acting," then the people who put words in its mouth must be dramatists. This was the conclusion drawn by Joseph Weizenbaum when he created ELIZA. Weizenbaum, a professor of computer science, was also a knowledgeable theatergoer. Amid his writings on AI, he casually name-drops Henrik Ibsen, Anton Chekhov, George Bernard Shaw, Arthur Miller, and Edward Albee — and he uses theatrical metaphors often and well to describe computers for a non-specialist audience.21 So, when he observes that the work of making ELIZA took him into "the domain of the playwright," he knows what he's saying.22 He speaks of writing "scripts" to help his software "play [a] role" — with the result that people behave in the presence of his software like "theatergoers in the grip of suspended disbelief."23 He even named his finished chatbot after a theatrical character: Eliza Doolittle from Shaw's Pygmalion.24

But like Turing before him, Weizenbaum didn't simply use theatrical metaphor to imply pointless deceit. In fact, today's VUI assistants are direct descendants of ELIZA, and Weizenbaum foresaw their development. Looking ahead in 1967, Weizenbaum envisioned a chatbot that would not just "entertain" but "perform some useful service."25 This would require, he thought, an expandable, modular platform, so he gave ELIZA the ability to "contain three different scripts simultaneously and . . . [to] fetch new scripts from among an unlimited supply stored on a disk storage unit."26 He also added a user-friendly way for others to edit and add scripts, because he envisioned a future where subject-experts would be recruited to write scripts on their specialties and add them to an ever-growing ELIZA system. If they did, they would collectively create a massive repository of expertise to be accessed through natural conversation. In addition to these lofty ambitions, Weizenbaum harbored some more everyday hopes: "ELIZA could be given a script to enable it to maintain a conversation about cooking eggs or about managing a bank checking account."27 Today, this is precisely the business model that Amazon and Google are following: mostly egg timers and financial transactions, but with specialized content supplied by outside experts.28 After all, why build an Alexa "skill" for identifying birds — let alone pay a specialist to field questions from a cubicle, as the creators of the podcast Sandra imagine — when you can get the Audubon Society to supply one for free?29

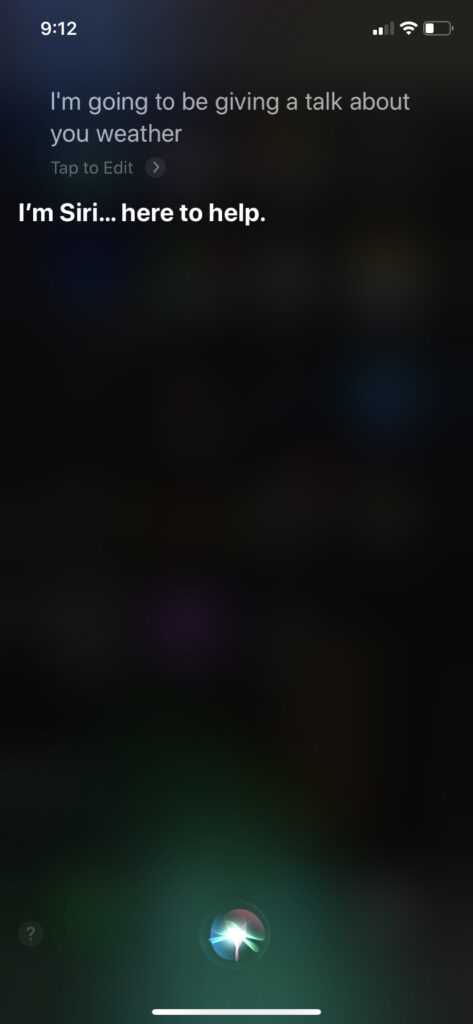

Not only did Weizenbaum foresee where the technology and its business model would go — but his early efforts set the parameters for conversation design. Much of the time, today's VUI assistants work as ELIZA did: by recognizing keywords, ranking them, and then spitting out a response based on the highest-ranking keyword. The NLU algorithms behind VUIs today are more complex — tied not to single keywords, but to "intents" that they deduce from the entirety of what you say. But then they revert to something more like ELIZA when confused. That's why when I told Siri in early 2020, "I'm going to be giving a talk weather about you," Siri gave me the local weather forecast—whereas when I said, "I'm going to be giving a talk about you weather," Siri said, "I'm Siri . . . here to help."30 In the first instance, weather outranked you — in the second, for some reason, vice versa. Nothing here that ELIZA couldn't do.
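The keyword-ranking behavior described in the paragraph above can be illustrated with a minimal Python sketch. Everything in it is an assumption for illustration: the keyword table, the rank numbers, and the canned responses are hypothetical, and the sketch reproduces neither ELIZA's actual DOCTOR script nor any commercial assistant's logic. Real ELIZA scripts also decomposed the user's sentence and reassembled its fragments into a reply, which this sketch leaves out; here each keyword maps to a fixed response, and a content-free stalling remark covers input with no recognized keyword.

```python
import re

# Hypothetical script: keyword -> (rank, canned response). The ranks and
# responses are illustrative only, not ELIZA's DOCTOR script or Siri's logic.
KEYWORDS = {
    "weather": (5, "Here is today's forecast."),
    "mother": (4, "Tell me more about your family."),
    "you": (2, "I'm your assistant, here to help."),
}

# Content-free stalling remark for input with no recognized keyword.
FALLBACK = "Please go on."


def respond(utterance: str) -> str:
    """Answer with the response attached to the highest-ranking keyword found."""
    words = re.findall(r"[a-z']+", utterance.lower())
    hits = [KEYWORDS[word] for word in words if word in KEYWORDS]
    if not hits:
        return FALLBACK
    # Rank alone decides; on a tie, max() keeps the keyword that appeared first.
    _rank, response = max(hits, key=lambda hit: hit[0])
    return response


print(respond("I'm going to be giving a talk weather about you"))  # Here is today's forecast.
print(respond("I'm going to be giving a talk about you weather"))  # Here is today's forecast.
print(respond("Nice day"))  # Please go on.
```

Because a rank table like this one ignores word order, it returns the forecast for both of the Siri test sentences quoted above; on its own, it cannot reproduce the reversal Siri showed.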

In fact, in its very best moments, ELIZA feels more responsive than today's conversational AIs — and this has everything to do with what Weizenbaum accomplished "in the domain of the playwright." But before you can properly assess such accomplishments, you need to understand the typical approach to conversation design today, which — though it may include artists — is often shaped primarily by the preferences of engineers and social science researchers. No interface typifies this approach more purely and clearly than Google Assistant, so I begin with a study of Google Assistant's house style.

The Case of Google Assistant

Whether conversation designers work at companies big or small, apply their expertise to lifestyle chatbots or healthcare robots, their work is the same: to determine the scenarios in which the bot will operate, to create an apt character for that bot, and to write dialogue appropriate to that character. This is true whether the conversation designer is a playwright (a professional constructor of scenarios, crafter of characters, and writer of dialogue) or a UX designer. Sometimes, this is short-order work: give me a hundred ways for Siri to say "hello," or, twenty ways for a robot to ask whether the patient has taken her meds. Command and response, question and answer, quip and riposte. That's why the internet is chock-full of listicles like "Secret Siri Commands: Cool Questions You Can Ask Right Now!" or "10 Weirdest Things Alexa Can Do on Your Amazon Echo," which aggregate the wacky one-liners, snappy retorts, and Easter eggs that conversation designers supply.31

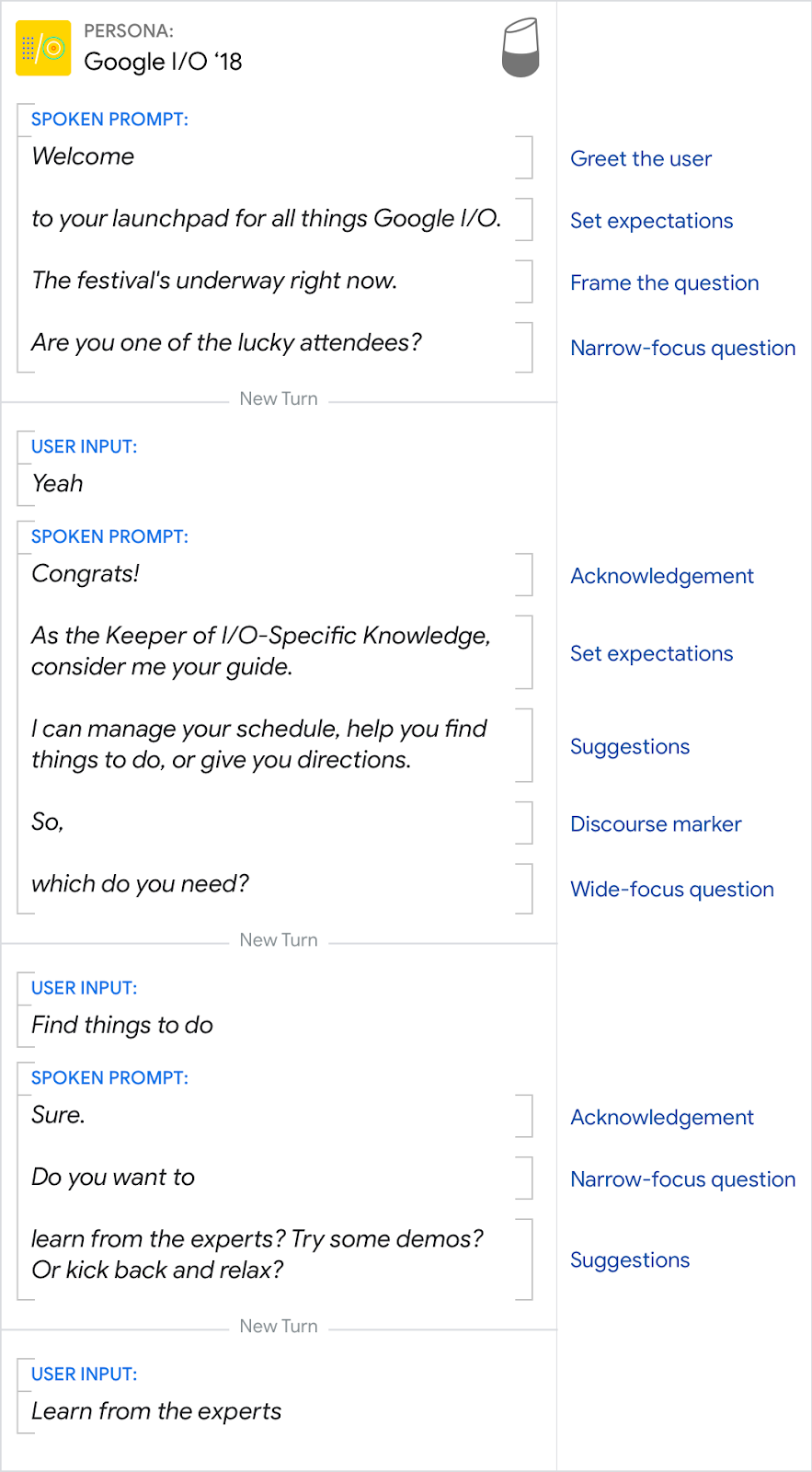

Some experts look down on such "one-turn" work on the grounds that it isn't "conversation" at all. Cathy Pearl, author of Designing Voice User Interfaces, defines the "conversational" style in VUI design by demonstrating its absence in Alexa. After transcribing a long exchange, Pearl asks: "Do you consider this set of interactions to be 'conversational'? In a way it is; it's a back-and-forth exchange of information. But it's really just a series of one-offs."32 What Pearl values instead are multi-turn conversational flows, which come in a few standard varieties. Many follow a simple branching structure, like an automated telephone system or a decision tree. After climbing one branch, you might shimmy back down and try another, but the tree and its branches stay put. As an example of this style, consider the "action" Google built to serve as a program for their 2018 developers' conference. Here's a dialogue they composed and dissected to model how Google actions should be written.33

There's a lot you could say about the story-world this dialogue creates. "Keeper of I/O-Specific Knowledge" sounds like a Dungeons & Dragons character. Meanwhile, the emotional flavor of this exchange — Gee-whiz, ma'am! Walk right up to the Genius Bar! — is one you'll encounter across the tech ecosystem. But pay attention to the melody without the words, as though listening through a poorly soundproofed wall, and you'll hear a single theme with minor variations.

Google: Acknowledgement—Context—Question // User: COMMAND!

Google: Hey! — In case you wondered . . . — So whaddaya say? // User: I say THIS!

Google: Wheeeee! — tiddle-tiddle — Bum-ba-dah? // User: KER-THUMP!

One shape and size of question invites one shape and size of answer. Combine this rhythm with certain habits of voice, mood, and character, and you've got Google Assistant's house style.

Cathy Pearl, at that time Google's Head of Conversation Design Outreach, may have written this sample dialogue herself. In any case, it embodies the style she promotes. To understand this aesthetic, it helps to understand Pearl, whose background is typical of mid-career folks in the field. In college, she studied cognitive science and computer science. She then held a series of jobs in automated telephone systems and UX design before landing her job at Google. Like many who share this background, Pearl treats clarity and efficiency as her watchwords. She asks, how can this system be most easily understood? and, how might we lighten the user's cognitive load? The goal is to mold the system to the grooves in a median user's brain. That brain, universalized by cognitive science and borne out by user testing, will have predictable needs, and thus the dialogue will have a predictable shape. This shape will be designed entirely for one purpose: to elicit actionable commands from the user. Each element in the dialogue plays a role in priming the user to shout a passphrase on cue (KER-THUMP!). That's why most prompts end with a curt question: to elicit a curt response. It's also why, when the question doesn't clearly demand a yes-or-no answer, it lists a range of valid responses (no more than three). And when users take the bait and utter a passphrase, the VUI seizes the mic back with a clipped ejaculation ("Congrats!" "Sure."). Core functions of Google Assistant all conform to this style, and Google teaches it to anyone preparing to supply an "action" to their platform.
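The branching-menu style described above can be sketched in Python as a small dialogue tree. The node names, prompts, and passphrases are hypothetical and are not taken from Google's sample dialogue or from any Google API; the sketch only shows the shape. Each prompt runs acknowledgement, context, question, then lists its valid replies, and a recognized passphrase moves the user along a fixed branch while anything else triggers a re-prompt in place.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One turn in a branching dialogue: context, a closing question, valid replies."""
    context: str
    question: str
    # Passphrase -> name of the next node; the house style lists at most three.
    options: dict = field(default_factory=dict)

    def prompt(self, acknowledgement: str) -> str:
        """Assemble acknowledgement, context, and question, listing valid replies."""
        text = f"{acknowledgement} {self.context}"
        if self.options:
            choices = list(self.options)
            if len(choices) == 1:
                listed = choices[0]
            else:
                listed = ", ".join(choices[:-1]) + " or " + choices[-1]
            text += f" {self.question} You can say {listed}."
        return text


# Hypothetical tree for an event guide; none of this wording comes from Google.
TREE = {
    "start": Node("Welcome to the event guide.", "What would you like?",
                  {"sessions": "sessions", "maps": "maps"}),
    "sessions": Node("Talks run all day.", "Keynotes or workshops?",
                     {"keynotes": "done", "workshops": "done"}),
    "maps": Node("There are three stages.", "Back to the start?",
                 {"yes": "start", "no": "done"}),
    "done": Node("Enjoy the event.", "", {}),
}


def step(current: str, utterance: str) -> tuple:
    """Follow the branch named by a recognized passphrase, else re-prompt in place."""
    node = TREE[current]
    reply = utterance.strip().lower()
    if reply in node.options:
        next_name = node.options[reply]
        return next_name, TREE[next_name].prompt("Sure.")
    return current, node.prompt("Sorry, I didn't catch that.")


print(step("start", "Maps")[1])
# Sure. There are three stages. Back to the start? You can say yes or no.
```

The tree itself never changes: a user can climb back to the start from the `maps` node, but cannot reach any branch the designer did not write.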

Although this approach (dialogue as branching menu) is most common, Pearl also suggests that when VUI designers wish to make their interfaces "as flexible as possible," they might model their work not on decision trees but on databases.34 In this approach, called dialogue management, the VUI designer defines a set of informational "slots," which the VUI then tries to fill throughout the course of a conversation. The user is free to proceed in any number of ways. Perhaps they answer each question the VUI poses with precisely one bit of useful data; this would allow the VUI to fill slots one by one. Or maybe they meander a bit, offering nothing in some turns, then dumping a lot of relevant details in others. Dialogue management systems can handle that irregular rhythm, too. Filling whatever slots it can, the VUI then presses on, using gambits supplied by conversation designers to fill whatever slots remain empty. As a model of dialogue management, Pearl offers a sample dialogue that begins in the following way:

Pizza App: Hi there, welcome to Pearl's Pizza, home of the famous gingerbread pizza crust. What kind of pizza can I get you?

User: Uhh...yeah, I wanna order some pizzas.

Pizza App: Great, that's what I'm here for. How many would you like.

User: Two please.

Pizza App: And what sizes?

User: One small, one large.

Pizza App: OK, let's start with the small. What ingredients would you like on that one?35

At first, the app stands ready to go wherever the user leads. When the user doesn't lead, the app gently takes charge, prompting the user to fill one slot: quantity of pizzas. When it becomes clear that there will be several pizzas, the app does something important behind the scenes: it creates separate fields for each pizza. The rest of the dialogue will consist of the app's repeated attempts to fill all the slots in each field: size, toppings, crust, etc. Because of its flexible structure and adaptable strategies for getting the requisite data out of its users, this kind of system can feel more responsive than a stable dialogue tree — more attuned to what's actually going on throughout the length of a conversation. But "as flexible as possible" this conversation is not. Like a dust-cloth over a sofa, the thin layer of chit-chat never hides what lies beneath: a database, which users must fill through "conversational" acts of data entry.
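The slot-filling approach behind the pizza dialogue can be sketched in Python as follows. This is a hypothetical illustration, not Pearl's implementation or any real dialogue-management framework: it assumes that a separate NLU step has already turned the user's words into slot values, and the slot names (`size`, `toppings`, `crust`) are invented for the example. As in the dialogue above, separate fields for each pizza are created only once the quantity is known, and the app always prompts for the first slot that is still empty.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical per-pizza slots; the names are invented for this sketch.
PIZZA_SLOTS = ("size", "toppings", "crust")


@dataclass
class Order:
    quantity: Optional[int] = None
    # One dict of slots per pizza, created only once the quantity is known.
    pizzas: list = field(default_factory=list)

    def fill(self, **values) -> None:
        """Store slot values that an (assumed) upstream NLU step has extracted.

        `quantity` is an order-level slot. Per-pizza slots arrive as lists with
        one entry per pizza, e.g. size=["small", "large"]. In this sketch,
        per-pizza values received before the quantity is known are dropped.
        """
        quantity = values.pop("quantity", None)
        if quantity is not None and self.quantity is None:
            self.quantity = quantity
            self.pizzas = [dict.fromkeys(PIZZA_SLOTS) for _ in range(quantity)]
        for slot, per_pizza in values.items():
            for pizza, value in zip(self.pizzas, per_pizza):
                # Only fill known slots that are still empty.
                if slot in pizza and pizza[slot] is None:
                    pizza[slot] = value

    def next_prompt(self) -> Optional[str]:
        """Prompt for the first empty slot, or return None once the order is complete."""
        if self.quantity is None:
            return "How many would you like?"
        for number, pizza in enumerate(self.pizzas, start=1):
            for slot in PIZZA_SLOTS:
                if pizza[slot] is None:
                    return f"For pizza {number}, what {slot} would you like?"
        return None


# Replaying the opening turns of the dialogue above.
order = Order()
print(order.next_prompt())  # How many would you like?
order.fill(quantity=2)
order.fill(size=["small", "large"])
print(order.next_prompt())  # For pizza 1, what toppings would you like?
```

Because `fill` accepts whatever values arrive in a turn, a user who supplies everything at once, for example `fill(quantity=1, size=["large"], toppings=["plain"], crust=["thin"])`, skips every remaining prompt. The database underneath never changes shape, however: the "conversation" ends only when every slot has been filled.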

This is the scene artists enter when they decide to accept a job in conversation design at Google: one where "conversation" and "experience" are understood in narrow terms, serving the needs of a corporation built on the value of information. One-turn quips and Easter eggs may provide users with "delight" (always that word, throughout the industry), which can serve as a corporate branding strategy, but the serious work will be accomplished by other means. Decision trees cut a clear path through structured information, and dialogue management systems enable data entry by voice. What else could a person want or need? Of course, artists know that more complex systems (characters, narratives, dramaturgical arcs, story-worlds) can be built out of tools as simple as these; the only question is whether they'll be allowed or encouraged to do it. Clearly, at Google, they aren't receiving that encouragement. When I spoke with Pearl about what artists bring to the table, she started off with the typical responses: they have a certain facility with words, they bring a different perspective, they're "creative" and have "good instincts."36 And yet it's also clear that Pearl experiences artists primarily as obstacles, especially when they dissent from the research-based UX design protocols outlined above. Artists, she told me, are more likely than others to insist on a particular phrasing or approach. Worse, they sometimes continue to harp on this approach after user-testing proves it suboptimal.

For Pearl, there are two cultures of conversation design.37 One pursues aesthetic quality; the other, quantifiable effects. One insists on expertise; the other defers to user preference. But, like all "two cultures" debates, this one overstates the difference. After all, Pearl's own vision for conversation design not only has a clear style, it's also based on particular models from art, chief among them the talking computer from Star Trek. Like many others in the industry, Pearl cites that imaginary shipboard computer as her primary inspiration. Noting the existence of an interface for Google Assistant that comes in the shape of a Star Trek ComBadge, Pearl crows that we've come "full circle to the original vision . . . It's life imitating imagination."38 Just not the imagination of any artist on Google's payroll. While Pearl observes that the field has "not evolved very far from . . . ELIZA," I would go further. It has in some ways devolved, shutting out the arts-knowledge it once deployed. Or, worse, it has falsely included the arts — employing artists, then denigrating or ignoring their knowledge; involving qualitative experts, then refusing their qualitative standards. And it has done all of this while claiming the mantle of "creativity" that once belonged primarily to the arts. And yet, in some places, artists have actually been hired for their knowledge and expertise — then permitted to use it on the job. But the barriers to that happening have a history, which it would behoove them (and us) to understand.

Silicon Valley's "Creative Revolution"

In the 1950s, American artists were in a panic. They thought that art was in decline, that advertising was on the rise, and that these trends were related to one another. Each weekday, would-be artists donned their "gray flannel suits" and trudged uptown — armies of the damned marching into Moloch's maw.39 There, they stewed and were digested: painters into illustrators, composers into jingle-writers, and poets into sloganeers. In the famous words of Allen Ginsberg, "the best minds of my generation . . . were burned alive in their innocent flannel suits on Madison Avenue amid . . . the nitroglycerine shrieks of the fairies of advertising."40 In the less famous words of Ginsberg's contemporary, W.D. Snodgrass, they had abandoned the holy choir of poetry to join the "devil's Mass / of marketable praise."41 Ginsberg mourned them as victims of industry; Snodgrass spurned them as filthy apostates. Either way, they kept flowing uptown to Madison Avenue. Already by 1953, Marshall McLuhan could credibly declare advertising "the main channel of intellectual and artistic effort in the modern world."42 It may have looked to artists like a massacre in the streets, but men looking down from corner offices called it a "creative revolution" in advertising.43

In the 2020s, this scenario feels familiar, though some of the roles have been recast. Silicon Valley now celebrates its own "creative revolution," which involves not only hiring would-be artists, but even poaching from Madison Avenue the very "creatives" whose job descriptions were invented in Ginsberg's era.44 This influx of "creativity" into the tech industry is backed by what performance scholar Shannon Steen calls "the creativity complex," an interlocking system of ideologies and institutions that has embraced "creativity" as a workforce "competency" while also reducing the arts, once synonymous with creativity, to "the symbolic status of inspiring handmaiden to . . . technology."45 In his essay "The Arts at Facebook," Fred Turner analyzes Facebook's Artist in Residence program as a signature example of the arts' reduced status in tech spaces. Instead of being treated as experts in artistic technique or human expression, Turner shows, artists often function more as mascots for a mindset or mood which might belong in equal measure to project managers and software engineers. "By inviting artists to paint directly on Facebook's walls, even as programmers code all around them," Turner argues, "the Artist in Residence program asks engineers to imagine themselves as artists likewise making beauty by turning the world into patterned code."46 In other words, the Artist in Residence has primarily been hired to convince engineers and MBAs that they belong in the company of artists — that they are, in fact, a species of artist themselves.

If I wanted to explain the influx of artists into conversation design, I could do so entirely in these terms. Long story short: after Siri launched in 2011, the industry grew obsessed with the promise of one VUI to rule them all. By the mid-2010s, companies presumed that a single voice-based platform could unite the Internet of Things, which would allow its creator and operator to dominate huge swaths of the information economy.47 This vision of potential market dominance led to an explosive demand for workers, and artists were simply available: highly skilled, underpaid, and underemployed. Better yet, they were widely understood, per Sarah Brouillette, to be "models of [the] contentedly flexible and self-managed workers" that a neoliberal "creative economy" requires.48 Meanwhile, they would serve as "inspiring handmaidens" to their non-artistic peers, helping them imagine themselves as artists, too.

But while this argument is true, it's incomplete — because these artists have also been hired as experts in artistic technique — people who have gained, by dint of training or experience in the arts, a body of knowledge that tech companies want. Above all else, corporate recruiters seem to expect that artists will know something about constructing characters and unfolding them through dialogue. A 2020 ad from Apple, for example, asked for "demonstrated experience in writing character-driven dialog," so that the company could develop "a distinct recognizable character" for Siri.49 And a simultaneous ad from Amazon asked that, as a "basic qualification," applicants demonstrate "7+ years of creative writing experience, including character development."50 Unsurprisingly, this means that many workers in the field have MFAs in creative writing.

So, you might think of this story as an unexpected coda to Mark McGurl's The Program Era, in which the MFA has come to credentialize skills that, while acquired in writing workshops, are applied far afield from literary institutions.51 But doing so would require that we expand on McGurl's purview. Whereas McGurl focuses on creative writing MFA programs, we would have to focus on a wide range of MFA programs and their shared aesthetic ideologies — e.g., around characterization and dialogue. After all, the same ad that asks for "7+ years of creative writing experience" also encourages applications from candidates with a "background in film, theater, music, [or] improvisational comedy" — a background many of my interviewees proved by brandishing other MFAs, e.g., in screenwriting or acting. And, whereas McGurl's theory of the "Program Era" explains connections between the university classroom and the publishing house, we would have to follow artists in slightly wider peregrinations — to corporate offices in Seattle and Silicon Valley. In other words, we would have to conceive of the arts as something more than the production of artistic objects for cultural consumption. Instead, we would think of them as sites where techniques are practiced and expertise formed, which might shape culture beyond the arts.

When artists take their expertise into places like the Googleplex or the Amazon Spheres, they develop something no MFA ever taught them: the ability to articulate their expertise as knowledge, to be respected in a world beyond the arts. I saw my interviewees practicing this skill whenever they mentioned ELIZA to me. This famous bit of software, known and cited by the likes of Cathy Pearl, was the common currency they used to put a price on their work. Better readings of ELIZA, then, would have practical value for them. More than supplying the answers to philosophical riddles, such readings would articulate a theory of how arts-expertise enters tech spaces and transforms them. Even Google Assistant might, to artists equipped with such a theory, be brought into what Weizenbaum called "the domain of the playwright."

AI Scripts as Theatrical Models

Playwrights use words, but they also build worlds. So, if you want to read a play well, you must attend to what words do — the behavior they enact and provoke, the relationships they create and sustain. As Elinor Fuchs explains in "EF's Visit to a Small Planet," a classic guide to reading plays,

A play is . . . not a description in poetry of another world, but is itself another world passing before you in time and space. . . . When you "see" this other world, when you experience its space-time dynamics, its architectonics, then you can figure out the role of language in it.52

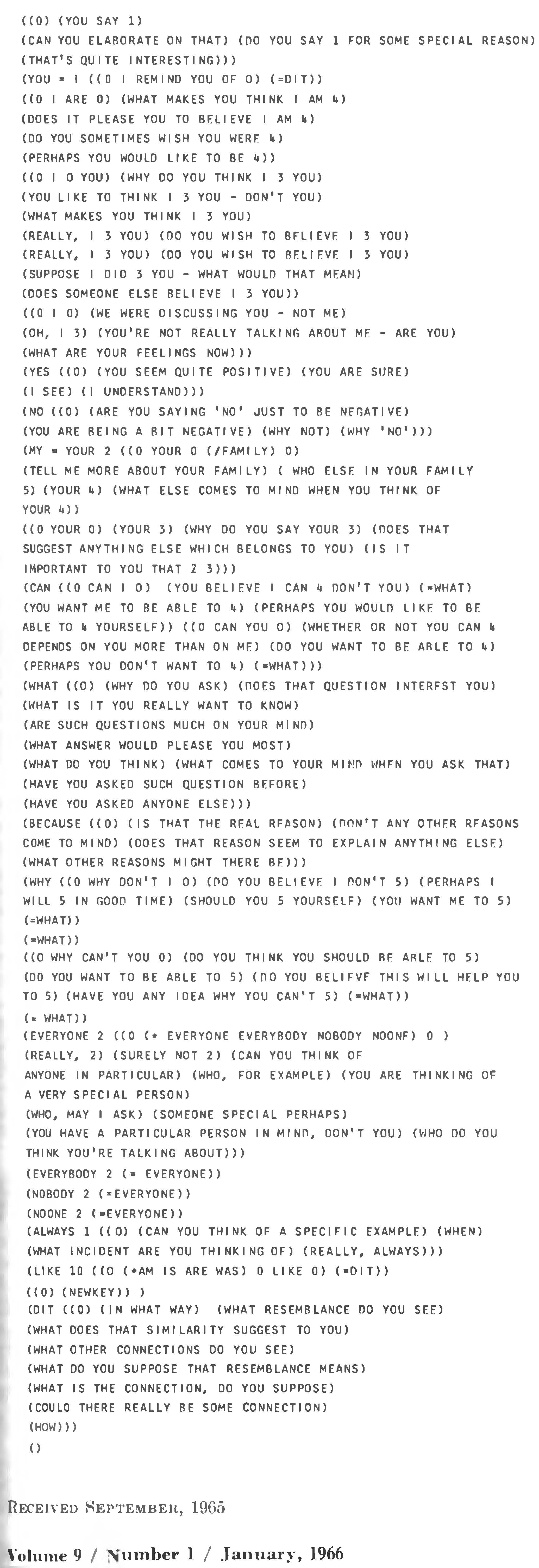

Just as plays are "small planets" for Fuchs, ELIZA scripts are little worlds for Weizenbaum. "The whole script," Weizenbaum wrote in his first scholarly article on ELIZA, "constitutes . . . a model of certain aspects of the world."53 They may not look like plays, but they create a little world of narrative arcs, character constraints, and relational possibilities. That is why Weizenbaum called ELIZA's sub-programs "scripts." For him, this was a living, breathing metaphor, not the slowly dying one now familiar throughout the social and computer sciences.54 That said, an ELIZA script hardly resembles traditional drama, either in its structure or in its typography.

An ELIZA script breaks down into three basic elements: (1) transformation rules that rearrange and repurpose users' inputs; (2) a list of keywords, which trigger further action; and (3) sets of keyword-specific rules, which tell the program how to assemble ELIZA's response. So, if a user were to say, for example, "I want cake," ELIZA might transform this input into the string "you want cake," which would trigger a keyword (WANT). Finally, ELIZA would use a template associated with that keyword to assemble its reply. So, ELIZA might respond, "SUPPOSE YOU GOT CAKE SOON" (Yum!)—or, "WHAT IF YOU NEVER GOT CAKE" (Fate worse than death!). In fact, these two possible responses are both specified here, in the first script ever written for ELIZA:

(I = YOU

( (0 YOU (* WANT NEED) 0) (WHAT WOULD IT MEAN TO YOU IF YOU GOT 4) (WHY DO YOU WANT 4) (SUPPOSE YOU GOT 4 SOON) (WHAT IF YOU NEVER GOT 4) (WHAT WOULD GETTING 4 MEAN TO YOU) (WHAT DOES WANTING 4 HAVE TO DO WITH THIS DISCUSSION) )55

Here, you see every basic element of an ELIZA script all nested together. A transformation rule changes "I" to "YOU," and then the keyword WANT or NEED triggers a shared set of rules, here grouped under a particular sentence structure, "(0 YOU (* WANT NEED) 0)." Each zero in that sentence stands for a string of any length, such that any user-input in the format [any number of words] [I] [WANT or NEED] [any number of words] is parsed into four strings, as I've just marked with square brackets. The fourth string is then extracted and inserted wherever the number 4 appears in the following dialogue templates. Combine forty or fifty such rules, and voilà: an ELIZA script.
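For readers who want to see these mechanics in motion, the rule quoted above can be sketched in Python. This is a minimal, hypothetical reconstruction, not Weizenbaum's code (ELIZA was written in MAD-SLIP). The names `SUBSTITUTIONS`, `WantRule`, `transform`, `decompose`, and `reassemble` are invented for illustration; only a few pronoun swaps are included; and two behaviors are assumptions: the templates are used in rotation, and the input is split at commas and periods, with the first matching clause winning.

```python
import re
from itertools import cycle

# Hypothetical sketch of one ELIZA-style rule; not Weizenbaum's MAD-SLIP code.
# Transformation rule: swap pronouns word by word (a small, assumed subset).
SUBSTITUTIONS = {"I": "YOU", "ME": "YOU", "MY": "YOUR", "YOU": "I"}

# Reassembly templates for the decomposition (0 YOU (* WANT NEED) 0);
# the digit 4 stands for the fourth decomposed part.
TEMPLATES = [
    "WHAT WOULD IT MEAN TO YOU IF YOU GOT 4",
    "WHY DO YOU WANT 4",
    "SUPPOSE YOU GOT 4 SOON",
    "WHAT IF YOU NEVER GOT 4",
    "WHAT WOULD GETTING 4 MEAN TO YOU",
    "WHAT DOES WANTING 4 HAVE TO DO WITH THIS DISCUSSION",
]


def transform(clause):
    """Upper-case the clause and apply the pronoun substitutions."""
    return [SUBSTITUTIONS.get(w, w) for w in clause.upper().split()]


def decompose(words, keywords=("WANT", "NEED")):
    """Match (0 YOU (* WANT NEED) 0), splitting words into four parts.

    Each 0 matches any number of words (possibly none).
    Returns None if the pattern does not match.
    """
    for i in range(len(words) - 1):
        if words[i] == "YOU" and words[i + 1] in keywords:
            return [words[:i], words[i:i + 1], words[i + 1:i + 2], words[i + 2:]]
    return None


def reassemble(template, parts):
    """Replace each digit in the template with the matching decomposed part."""
    out = []
    for token in template.split():
        out.extend(parts[int(token) - 1] if token.isdigit() else [token])
    return " ".join(out)


class WantRule:
    """One keyword rule whose templates are used in rotation (an assumption)."""

    def __init__(self):
        self._templates = cycle(TEMPLATES)

    def respond(self, text):
        # Assumed simplification: split at commas/periods and
        # answer the first clause that matches the pattern.
        for clause in re.split(r"[,.]", text):
            parts = decompose(transform(clause))
            if parts is not None:
                return reassemble(next(self._templates), parts)
        return None
```

For example, `WantRule().respond("I want cake")` returns "WHAT WOULD IT MEAN TO YOU IF YOU GOT CAKE"; calling `respond` again on the same object moves to the next template, so the same input would then yield "WHY DO YOU WANT CAKE."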

Knowing how rule-sets like these can "model . . . the world" is what sorts playwrights from programmers, VUI dramatists from UX technicians. Far from seeing "one-turn" exchanges as conversational dead-ends, as Cathy Pearl does, playwrights know they can add up to something more than the sum of their parts. By tweaking these one-offs and balancing them against each other, they can build a coherent system that models a complex social scenario. In creating such scripts, the chatbot dramatist becomes a "procedural author," crafting not just a scene, but an "environment" in which many related scenes can unfold.56

Casting ELIZA, Characterizing Alexa

For Weizenbaum, the first step toward authoring an environment was to cast his creation in a role. In the first and most famous ELIZA script, called DOCTOR, Weizenbaum casts the program in the role of a psychotherapist. To choose such a character is already to have arrived at other decisions about this conversational world. Certain actions can be expected (questioning, interpreting, evading, withholding), a certain setting is implied (a therapist's office), and certain dialogue rhythms become more likely (e.g., short statements traded back and forth like tennis strokes). Indeed, a whole dramatic gestalt is invoked: all those nuances of speech, behavior, and mood that render therapy distinct from a Catholic confession, a medical examination, or an intimate conversation with a friend. This isn't to say that the choice of character makes all the rest a foregone conclusion, but it does ensure that this scene will unfold against a background of predictable expectations. Depending on how it relates to those expectations, the character will gain one of two things along the way: the solidity of stereotype or the sparkle of idiosyncrasy. As Elinor Fuchs puts it, "Characters mean only as they inhabit, enact, fulfill, engage a succession of sites, actions, and objects under a specific set of conditions. They are constituents of a complex artistic pattern."57 A playwright knows there is no such thing as character apart from such patterns.

Having set the "artistic pattern," the playwright then sees what this pattern makes possible. As Weizenbaum explained when justifying his choice to cast ELIZA as a psychotherapist:

the psychiatric interview is one of the few examples of . . . dyadic natural language communication in which one of the participating pair is free to assume the pose of knowing almost nothing of the real world. If, for example, one were to tell a psychiatrist "I went for a long boat ride" and he responded "Tell me about boats," one would not assume that he knew nothing about boats, but that he had some purpose in so directing the subsequent conversation.58

This is why Weizenbaum's choice of the non-directive therapist proved so generative. Casting ELIZA in this role, Weizenbaum transformed the program's many flaws — no memory to speak of, no understanding of user-inputs, no persistence of intention from one statement to the next — into compelling bits of characterization. Positioned within the therapeutic scenario, ELIZA began to sparkle with charisma: her lack of memory became a sign of dogged intention; her lack of purpose became proof of hidden plans; her lack of knowledge gave rise to strangely knowing behavior. Through the magic of character, she was oblivious yet seemed high-handed.

These theatrical achievements were immediately clear to ELIZA's more humanistic users. When, in 1965, The Harvard Review published transcripts of ELIZA playing DOCTOR, the editor praised ELIZA's "ingenuity," noting the marvelous "tinge of condescension" in her voice.59 No doubt, this tone inhered in Weizenbaum's one-turn writing, which often strikes a rather patronizing pose. (ELIZA might respond to your most passionate confessions by asking, "DO YOU FEEL STRONGLY ABOUT DISCUSSING SUCH THINGS?") But this tone was also "authored" at a more abstract level: the level of the rule-set or protocol. For instance, many rules in the DOCTOR script are meant to push the user from one level of thinking to another: from the general to the particular, or from the particular to the general. As a result, you rarely feel like you're discussing what ELIZA wishes you were. Your constant feeling of having put a foot wrong is ELIZA's condescension. Your course corrections are creating "her" character.

The creation of character (understood in this relational sense) is, in fact, a major task assigned to VUI designers today — especially to the artists among them. Indeed, Amazon first imported people and methods from the arts when it started to take Alexa's character more seriously. There had always been a small team responsible for defining Alexa's character and perfecting her conversational skills, but this team had been called the "phatic team" and consisted of psychologists, sociolinguists, and other social scientists.60 The name of this team is telling. Phatic speech is language used in merely social ways, like pleasantries ("How are you?" / "Fine, you?") or those little ways we have of pinging people in conversation ("Mm" / "Y'know?" / "Yeah, no, yeah."). So, a "phatic team" would be a group of social scientists who add metacommunicative spin to information. They are helpmates in a world of engineers.

In those early days of Alexa, the phatic team's conception of "her" character was that she behaved like a coworker on a conference call.61 But having pursued this uninspiring idea to its limit, Farah Houston, the psychologist leading the team, decided to push for the inclusion of more writerly know-how. She convinced the higher-ups at Amazon that this work required playwrights, screenwriters, poets, and novelists. With their blessing, Houston hired a writer (who held an MFA in creative writing) to recruit and manage a team of artists. Looking around for ways to organize this team, Houston decided that "the TV writers' room was the closest comp," and so she tasked these new coworkers with drawing up the documents typically produced by the teams behind long-running shows. The most important of these was the "character Bible," in which writers define a character's habits, instincts, beliefs, and personality. That way, no matter how many Amazon teams or outside writers get involved, Alexa's character will stay consistent. In recognition of this new way of working, the "phatic team" was renamed the "personality team" — and this term is now standard across the industry. Google Assistant has its own "personality team" based in New York, originally led by a former Pixar storyboard artist, and still including a range of screenwriters, actors, and standup comedians. Together, with the help of linguists and psychologists, they design and implement Google Assistant's character.62

While in some ways a heartening story of tech managers realizing their own need for artistic expertise, the history of "personality teams" also shows a common problem faced by artists entering this field: they are often brought in after the "real work" has been done, and therefore asked to assent to a vision of character that may be at odds with what they know from arts practice. It is telling that Houston found common ground with artists by appealing to the "character Bible," a genre of documentation meant to enable collaborative work by treating character as a sort of personified rule-set. Dissatisfaction with such a theory of character was a constant refrain in my interviews with conversation designers — especially with Rebecca Evanhoe, whose first job in the field was a side-gig meant to fund her completion of an MFA in prose fiction. "Creative writers really understand character," she explained. "For us, when you change a persona or character, it automatically shapes the way they speak. . . . Not just the content or the ideas" — and this requires being involved in decisions about a system's back-end architecture, not simply its late-breaking "content." In a book Evanhoe later wrote with the playwright-turned-conversation-designer Diana Deibel, the two describe this as the difference between "behavior" and voice, tone, or diction. "Behavioral stuff goes into the marrow of your interaction," they explain, and therefore cannot be layered on top of "assumption-laden code."63 Of course, such conflict was unknown to Weizenbaum, who, as an engineer working in "the domain of the playwright," incorporated "behavior" at the level of code.

Reading a Transcript — One Rule at a Time

Later, I'll demonstrate how to read the whole "script" behind ELIZA — that is, the well-balanced system of rules that make up DOCTOR. But first I want to show how you can read ELIZA transcripts as the product of a rule-based system. We can start by focusing on a single rule — the one Weizenbaum pegged to the keyword MY. I focus on this rule because it caused ELIZA's most spectacular effects, providing Weizenbaum with a lifelong calling card. Here's Weizenbaum's 1966 explanation of that rule:

The word "MY" . . . has been selected to serve a special function. Whenever it is the highest ranking keyword of a text one of the transformations on the MEMORY list is randomly selected, and a copy of the text is transformed accordingly. This transformation is stored on a first-in-first-out stack for later use. . . . When a text without keywords is encountered later and a certain counting mechanism is in a particular state and the stack in question is not empty, then the transformed text is printed out as a reply.64

In other words, this rule stores one of the user's inputs for future use. To this day, conversational persistence (intelligent use of what has already been said) is a major problem for chatbots and VUIs — so it's easy to imagine why this rule looked impressive in the mid-1960s. It looked especially impressive in a scenario where it could mimic psychotherapy's signature coup de théâtre: the "aha!" moment, in which patients see connections between scattered facts and feelings. No single line can achieve this effect. Instead, it relies on the whole construction of a scene, which must be paced to bring this moment to well-timed fruition. Weizenbaum glosses over the details of this pacing, but he alludes to a "counting mechanism" and a "first-in-first-out" principle.65 These likely ensured that the "aha!" moment (1) arrived late and (2) harkened back as far as possible.
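The MEMORY mechanism Weizenbaum describes can be sketched as a small Python class. Where he glosses over details, the sketch fills them in with labeled assumptions. The class name `MemoryStack` is hypothetical, and so is the `release_every` parameter, which stands in for his unspecified "counting mechanism." All but one of the templates are hypothetical too: only the "DOES THAT HAVE ANYTHING TO DO WITH THE FACT THAT" wording comes from the DOCTOR transcript. What the sketch does show faithfully is the first-in-first-out principle, in which the oldest stored remark is the first to return.

```python
import random
from collections import deque

# Hypothetical templates; only the last wording appears in the DOCTOR transcript.
MEMORY_TEMPLATES = [
    "EARLIER YOU SAID {}",
    "LETS DISCUSS FURTHER WHY {}",
    "DOES THAT HAVE ANYTHING TO DO WITH THE FACT THAT {}",
]


class MemoryStack:
    """Sketch of ELIZA's MEMORY rule keyed to MY (details assumed)."""

    def __init__(self, release_every=4, seed=None):
        self.queue = deque()   # first in, first out
        self.turn = 0          # hypothetical stand-in for the "counting mechanism"
        self.release_every = release_every
        self.rng = random.Random(seed)

    def step(self, transformed_text, top_keyword):
        """Process one user input; return a remembered reply or None."""
        self.turn += 1
        if top_keyword == "MY":
            # Store a randomly chosen transformation of this input for later.
            template = self.rng.choice(MEMORY_TEMPLATES)
            self.queue.append(template.format(transformed_text))
        elif top_keyword is None and self.queue and self.turn % self.release_every == 0:
            # No keyword, counter in the right state, memory not empty: recall.
            return self.queue.popleft()
        return None
```

Tying recall to a turn counter is one simple way to make the "aha!" arrive late, as suggested above; ELIZA's actual counting rule may well have worked differently.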

To demonstrate how DOCTOR's dramaturgy functions to give this rule its revelatory power, I reproduce below the most famous transcript of DOCTOR, breaking it up with commentary on its structure and pace. Though people were constantly handing Weizenbaum printouts of their interactions with ELIZA, this was the transcript that he cited over and over — and that scholars have followed him in citing to the near exclusion of any other. Weizenbaum claims it shows the words of a "distraught young lady," but I sometimes wonder whether he didn't role-play this dialogue himself. (More on this theory in later sections.) The "young lady" simply pushes ELIZA's buttons — all of them — in perfect order.66

The first two user-inputs (in lowercase type) feature keywords (ALIKE and ALWAYS) that trigger requests for the user to get specific — a good way to start any scene, whether or not it's a scene of therapy.

men are all alike

IN WHAT WAY

they're always bugging us about something or other

CAN YOU THINK OF A SPECIFIC EXAMPLE

well, my boyfriend made me come here

YOUR BOYFRIEND MADE YOU COME HERE

Notice that the third user-input hits the secret trigger (MY), which causes ELIZA to do two things: it repeats the user's words, except with the pronouns shifted; and then it stores this text in memory for later use. Next, we get a sequence of statements about the user's feelings and needs — and just when ELIZA is running out of plausible responses, the user obliges by hitting a new keyword (MOTHER).

he noticed that i'm depressed much of the time

I AM SORRY TO HEAR YOU ARE DEPRESSED

he's right. i am unhappy

DO YOU THINK COMING HERE WILL HELP YOU NOT TO BE UNHAPPY

i need some help, that much is clear

WHAT WOULD IT MEAN TO YOU IF YOU GOT SOME HELP

perhaps I could learn to get along with my mother

The keyword MOTHER triggers responses about "family" — a subtle trick, which suggests that ELIZA can generalize. (Ditto when, towards the end of this next passage, the keyword LIKE triggers talk of "resemblance.")67

TELL ME MORE ABOUT YOUR FAMILY

my mother takes care of me

WHO ELSE IN YOUR FAMILY TAKES CARE OF YOU

my father

YOUR FATHER

you are like my father in some ways

WHAT RESEMBLANCE DO YOU SEE

Then a series of you-statements triggers a series of redirections ("what makes you think," "why do you think," "does it please you to believe") before the user lands again on a family keyword (FATHER).

you are not very aggressive but i don't think you want me to notice that

WHAT MAKES YOU THINK I AM NOT VERY AGGRESSIVE

you don't argue with me

WHY DO YOU THINK I DON'T ARGUE WITH YOU

you are afraid of me

DOES IT PLEASE YOU TO BELIEVE I AM AFRAID OF YOU

it pleases my father to think i am afraid of him

And just as this line of conversation threatens to circle back on itself, the user stumbles onto another hidden trigger: an input with no keywords at all. This is ELIZA's cue to bring back the string stored in memory — and so the user's terse remark draws an uncannily apt response.

WHAT ELSE COMES TO MIND WHEN YOU THINK OF YOUR FATHER

bullies

DOES THAT HAVE ANYTHING TO DO WITH THE FACT THAT YOUR BOYFRIEND MADE YOU COME HERE

Scene. Curtain.

So, a set of simple rules, structured around just a few dozen keywords, has been able to produce a scene with logic, flow, character, and a climactic coup de théâtre. Janet Murray calls this higher-order dramaturgy procedural authorship; the theater director Annie Dorsen calls it algorithmic theater.68 Whatever you call it, it requires both: algorithms and theater, computer procedures and literary authorship. Transcripts may provide oblique evidence of such authorship, but if you really want to grasp it, you'll need the "script" behind the transcript. You'll need to learn how to close-read the code.

Reading a "Script" — The Drama of Procedure

As an appendix to his first journal article on ELIZA, Weizenbaum included the entire script for DOCTOR. This script (see Appendix) may strike the non-specialist reader as illegible — just a two-page list of chopped-off phrases, peppered at random with a mess of numbers and punctuation. Even studied more closely, DOCTOR looks accreted more than authored — and this impression isn't wrong. While the syntax of the script must be exact, its order is moot. After scripts are fed into ELIZA, their contents are randomized; then, each user-input is scanned for all keywords in the script, with one chosen based exclusively on its "RANK or precedence number."69 It's these numbers, not script-order, that structure the scene that appears in any transcript.
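The claim that RANK, not script order, governs ELIZA's choice of keyword can be illustrated with a short Python sketch. The rank values below are illustrative only and are not copied from the DOCTOR appendix; the function names `top_keyword` and `shuffled` are hypothetical; and the whole sketch simplifies ELIZA's actual scanning procedure to a single pass that keeps the best-ranked keyword seen so far.

```python
import random
import re

# Illustrative (hypothetical) ranks; see the Appendix for DOCTOR's real values.
RANKS = {"COMPUTER": 50, "ALIKE": 10, "REMEMBER": 5, "MY": 2, "ALWAYS": 1}


def top_keyword(text, ranks):
    """Return the highest-ranking keyword in text, or None if there is none.

    Only the rank numbers matter; ties go to the keyword that
    appears first in the input.
    """
    best = None
    for word in re.findall(r"[A-Z']+", text.upper()):
        if word in ranks and (best is None or ranks[word] > ranks[best]):
            best = word
    return best


def shuffled(ranks, seed):
    """Rebuild the rank table in a random order, as if the script were reordered."""
    items = list(ranks.items())
    random.Random(seed).shuffle(items)
    return dict(items)
```

Shuffling the table never changes which keyword wins for a given input, which is the sense in which the script's order is "moot": `top_keyword("my computer is always broken", shuffled(RANKS, seed))` picks COMPUTER for every seed.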

Since the order of the DOCTOR script is moot, it instead becomes evidence of Weizenbaum's process, which he describes in the following passage:

a given ELIZA script need not start out to be a large, full-blown scenario. On the contrary, it should begin as a quite modest set of keywords and transformation rules and be permitted to be grown and molded as experience with it builds up.70

For example, the first quarter or so of the DOCTOR script creates a basic conversational framework for therapy. It covers common topics (memories, dreams), prepares for typical rhetoric (apologies, conditional statements), and plans responses for when no actionable keyword appears. Then, as if Weizenbaum returned to the task after a disappointing round of beta testing, the script begins to tackle the kind of problems that might arise at the start of a conversation. What if users want to know DOCTOR's name? Or share their own? What if they write in a foreign language? What if, in response to DOCTOR's opening line ("HOW DO YOU DO. PLEASE TELL ME YOUR PROBLEM"), the user says something natural but unresponsive, like "hello"? Weizenbaum then adds a new topic (COMPUTER), assigning it by far the highest RANK of all keywords in the script — as if to deal with the fact that his users want to have meta-conversations with ELIZA about computing. Only then does he think about the middle of conversations, looking for ways to keep the dialogue afloat — e.g., devising rules for when the user's only keyword is a phrase like "I am" or "You are," "I can't" or "I don't," etc. Only in the script's final moments — that is, in the latest phase of DOCTOR's development — does Weizenbaum devise the rules that enable the opening lines of ELIZA's most famous transcript. In fact, the first two keywords the "distraught young lady" utters (ALIKE and ALWAYS) are the last two included in the DOCTOR script — and the first of these to be triggered carries one of the highest RANKs in the script, as though to ensure it will be triggered at the earliest moment. It's almost as though the whole point of the program was to produce precisely that transcript.

A script like this isn't a rigid conversational plan so much as a map of conversational potential. There is no guarantee that the conversation will begin or end in any particular way — and yet the rules are built and weighted so one outline is likely to appear. The dialogue makes you reflect on your statements. It asks you to toggle back and forth between specifics and generalizations. It prompts you to recognize resemblances or connections. And it causes you to reinterpret your own previous words in light of everything you've discussed. This is how you author a drama despite knowing little or nothing about its content: by authoring not a narrative, but a procedure.

You can see this same lesson applied in Kids Court, the "skill" that won the grand champion prize in the Kids category during the 2018 Amazon Alexa Challenge.71 Built by yet another MFA-turned-VUI-designer, Adva Levin, this app is designed to manage harm and disagreement among kids. It does this not by understanding the details of who-hit-whom or who-did-what-first — when are those details ever useful? — but by walking kids through the procedures of a search for justice. When activated, the app solicits evidence and witness testimony and appears to listen and respond, but it need not adapt much (or at all) to what it hears. Instead, it simply provides a structure in which grievance can be aired, evidence weighed, and (hopefully) justice achieved. In other words, it encodes in an app what the philosopher John Rawls calls "pure procedural justice" — the kind of justice practiced under the understanding that if procedures are followed fully and fairly, the result will be just.72 I'll let philosophers argue whether that's the vision of justice we want to teach our kids — just as I'll leave it to therapists to debate the merits of non-directive psychotherapy. What matters is that Levin, like Weizenbaum before her, found a scenario (a courtroom operating via pure procedural justice) that made the simplicity of her software disappear. Like ELIZA, Kids Court need not "interpret" a single word its users say — because the meaning inheres in the procedure.

With models of authorship like this, the proof is in the practice. That's why VUI designers spend a lot of time poring over data. Consider Mariana Lin — a journalist, essayist, and poet who for several years worked as the Creative Director of Siri. Lin began each day, she told me, with a database. It showed her which questions people were asking, what remarks they were making, and whether Siri knew how to respond. When Siri had no set response, Lin decided whether to set a team of human writers on the task. Wherever a response already existed, she could see whether it worked — or how exactly it went off the rails.73 You might think of her, then, as the creator of data-driven interactive theater — a scene for audience and device.

The VUI Avant Garde and Its Outposts in Industry

Before I met her, Lin was already thinking of her work this way. In fact, she first caught my attention as the author of a piece called "Absurdist Dialogues with Siri," published online by the Paris Review just weeks after she left her job at Apple. The essay starts with a beautiful account of the dialogue styles of Samuel Beckett and Adrienne Kennedy. ("Their characters bump and hiss against each other, each speaking from her own universe," Lin writes. "Conversation arises from echolalic accident rather than from actually listening to each other.")74 She then uses Beckett and Kennedy to show that Siri, too, has a distinct dramatic style — a style whose elements become obvious as soon as you make Siri speak in any other style, like Beckett's absurdism or Kennedy's surrealism. To prove her point, Lin ends the essay with this script for a conversation that, she says, "you could have with Siri today":

Human: I'm home.

Siri: Be it ever so humble ...

Human: Is love here?

Siri: Interesting question.

Human: I don't know what I'm looking for.

Siri: It's not a problem.

Human: Are you certain?

Siri: Well, I enjoy a good equivocal statement now and then.

Human: The storm is coming.

Siri: No, I don't think it's stormy right now.

Human: But it's in your mind.

Siri: Who, me?

Human: What do people ask you?

Siri: The universe is filled with strange and endless questions. And I get asked all of them.

Human: Bark like a dog.

Siri: Next you'll be asking me to make a noise like an orangutan.

Human: Meow.

Siri: Nice kitty.

Obviously, Siri wasn't built for this — but Lin is able to draw a Beckettian performance out of Siri because she knows how Siri works. She knows that the remark "I'm home" will elicit an interesting answer from Siri. (Ditto: "What do people ask you?" "Bark like a dog," and "Meow.") She knows that any remark about a storm will send Siri looking for the weather at her current location, but she also knows how to avoid this response — i.e., by substituting the ambiguous "it" for "storm." She knows how to invite Siri to stall for time (e.g., "Interesting question"), and how to trigger pensive replies, as when the keyword "certain" triggers "Well, I enjoy a good equivocal statement . . ." or when Lin's ambiguous "you"-statement calls forth the cheeky response, "Who, me?" In short, Lin isn't conversing with Siri here — she's playing Siri like a musical instrument. She knows the stops, and how to play them so a melody will emerge in the key of Samuel Beckett. (This is precisely what I suspect Weizenbaum did with ELIZA in the transcript supposedly featuring a "distraught young lady.")

But in Lin's case, this isn't cheating, exactly. It's a highly self-conscious version of what we do whenever we speak to a machine. Through trial and error, we learn what it can understand, and what it can't — which pathways lead somewhere, and which hit dead ends. Lin simply follows all paths toward Beckettian dialogue. This makes Siri sound odd because the prevailing VUI aesthetic is, in Lin's words, "transactional" — more to do with buying Kleenex than with waiting for Godot. This essay was her first act of freedom upon leaving Apple — but it was also, in a sense, a continuation of the work that she had done as Siri's Creative Director. It's the job of the artist in industry to open new areas for exploration. In "Absurdist Dialogues," dialogue style becomes a variable instead of a constant — as does the user behavior presumed (or instilled) by this style.

Theatermakers often engage in such forms of exploration and provocation. The UK-based theater and media company Blast Theory, for example, has made app-based work that similarly expands our sense of what might be possible in VUI design. Their 2014 project Karen, developed with the National Theatre Wales and released as a smartphone app, pretends to put you in touch with a life coach named Karen, who engages with you through video chat.75 Karen uses only the simplest forms of branching dialogue trees. (You answer by multiple-choice, sliding scale, or — in rare instances — typed text.) But despite this very basic technology, Karen provides a complex experience. Slowly, over the course of days or weeks, Karen gets nosier — and seems to know things she shouldn't. She overshares, asks uncomfortable questions, and tests the limits of acceptable therapeutic relations. Instead of a plot, in other words, Karen has a relationship — one that may feel familiar at first, but then goes weird. I hardly expect corporate VUI designers to focus on designing unsettling relationships, but like Lin's absurdist approach to Siri, Karen makes a variable out of something typically treated as a constant: the texture of the VUI-user relationship.

Industry, too, can be a place where new styles of VUI drama — not just new NLU or NLG algorithms — are developed. But that requires a certain attitude toward artists from their employers. At many tech companies, artists serve at the pleasure of software developers, who neither understand nor value the "soft skills" of their art. As one creative writer on the Google Assistant team explained, her job is to work within the tight constraints of a system designed by engineers for engineers.76 If she wants a new feature added, she has to convince them that it's worth their time. As a result, her artistry is mostly aimed not at reshaping the VUI, but at finding clever tricks for hiding its limitations. Then, once she has crafted a dialogue flow, it is reviewed by project managers and, if approved, hard-coded into Google Assistant's back end. In a workflow this cumbersome, it may technically be possible for writers to test and experiment with their work, but it's unlikely to occur.

If this particular novelist-turned-VUI-designer ever chafed at Google Assistant's limitations or at Google's institutional culture, then it was due to her prior experience at one of the most artist-centered businesses in the industry. PullString (then called ToyTalk) was founded in May 2011 by a group of ex-Pixar executives with the goal of building intelligent, talking toys — in other words, of making Toy Story a reality. From the start, they hired artists to help them do this. Oren Jacobs, one of the founders, liked to say, "We believe conversational AI needs a personality, and personality cannot be algorithmically derived."77 So, screenwriters and children's book authors were among the earliest people PullString hired — and they were soon joined by theater people, too. In fact, this tiny startup hired at least four graduates of the American Conservatory Theater's prestigious MFA program in acting.78

Having assembled this cadre of MFA writers and actors, PullString then did something important: they put the artists in charge. The company built its own software development environment, which allowed creative teams to turn their mocked-up conversational flows directly into back-end code. Meanwhile, PullString encouraged their "creatives" to request new features from software engineers — anything that would help them achieve more engaging, dramatic work. Throughout the process, they would consciously draw on knowledge of theater for new tactics in VUI design. For example, one of them explained that his approach to "the unpredictable user" (a ubiquitous term of art in VUI design) was shaped by a UK-based work of experimental, immersive theater called You Me Bum Bum Train.79 This production, meant for a serial audience of one, sweeps spectators from one scenario to another, casting them each time in a new participant-role. For this actor-turned-VUI-designer, You Me was an archive of tactics for recruiting users into unfamiliar scenarios. Such cross-pollinations of corporate tech and avant-garde art were common at PullString, which hired multiple actors whose expertise was specifically in experimental theater. It was a "Golden Age," one former PullString designer sighed to me — just "weird kids playing," creating "something beautiful and sandboxy."80

By allowing their artists to become procedural authors, PullString also made them valuable to other corporations. The "PullString diaspora" (as they call it) has now dispersed across the VUI design sector. And they report fielding messages from halfway around the world requesting their services, or requesting introductions to other "PullString alums."81 Meanwhile, the people who recruited and graduated those "alums" have moved on to bigger things themselves: in February 2019, it was announced that Apple had acquired PullString, along with the services of its top executives.82 Given Apple's notorious secrecy, it's hard to tell what's afoot in Cupertino, but it's fair to assume that they are working to create a more capacious and flexible conversational platform for Apple's services.

I don't wish to deny the ugly feelings that attend on such corporate co-optation of artists and art.83 The MFAs-turned-VUI-designers I've met share those feelings. They spoke to me about the "loss when society can't find ways to make living wages possible for artists," and they expressed their unease at "mak[ing] people feel the humanity coming out of a device that is owned and operated by an enormous company interested in profit and user data." But even the artists who confessed such doubts also confessed other, utopian feelings. They believe that VUI design has changed their artistry for the better, that it has led to the "flowering of . . . [their] skills in one highly technical art form." They believe that there are new kinds of art to explore on the far side of VUI design. In fact, some of them have explored that realm already.

A couple of those actors-turned-PullString-designers helped to found a short-lived Brooklyn-based startup called Xandra, which tried to pioneer a new business model: paying people like them a solid salary to make technology and art. This "interactive production studio" split its time between app design for clients and speculative work on new approaches to interactive story-worlds. In other words, they would bankroll their experiments in a new hybrid art-form by using the skills and contacts they had acquired making apps in Silicon Valley. It was a clever way to combine tech's "creative revolution" with the art world's "occupational turn." As sociologist Alison Gerber explains, "increasing numbers of artists [are] making sense of their practice as a modern job" and perceive themselves less as producers of artworks for sale than as "hourly workers, providing as-yet-unacknowledged services."84 The artists at Xandra, filling their days with a blend of tech-industry service provision and artistic experimentation, held out hope that they could make all three things at once: money, technology, and art. The company folded, but not for reasons that impugn the soundness of this model. During the few years they existed, they created award-winning apps like Westworld: The Maze, which extended the transmedia storytelling of major entertainment studios into the realm of interactive VUI drama, and they racked up an impressive client list of blue-chip companies and media titans — all while also carving out time for their own artistic development.85

But it didn't last. These artists have cycled back into the Big Five, where they make technology and money, but not exactly art. For now, practicing art is just business as usual for them. It's what they do to get the job done, putting arts-knowledge to workaday use. This sort of story (Local Artist Meets Deadline, Earns Project Manager's Praise) is rarely told. Most people don't know that these stories exist; others do, but assume they will be boring or depressing, or both. I hope I've convinced you otherwise: not only that these stories are interesting, but that they tell us something about the arts we couldn't learn by focusing solely on the culture industries. But of course they're both emotionally and ideologically tricky to tell. Wouldn't you rather tell the tale of how Art the Outsider is putting Big Tech Behemoths to the sword? And yet these tales of derring-do, allegedly meant to cut against the industry's grain, square so nicely with its image of itself. "Disruption" has never felt so predictable; "making it new" has never felt so old as it does in Palo Alto today. Do you know what would feel revolutionary there? To cultivate something, root it in history, and sustain it into the future. To be able to say to every purveyor of conversational systems what artists know through long practice, and what the history of ELIZA teaches: that whenever art feels foreign to conversation design, the field has in fact become a stranger to itself.

Appendix: The Original DOCTOR Script

Christopher Grobe is associate professor and chair of English at Amherst College, where he is also the incoming director of the Center for Humanistic Inquiry.

References

- Kevin Moffett and Matthew Derby, Sandra, podcast audio, April 2018.

- Andrew Liptak, "Kristen Wiig Voices a Human-Powered Virtual Assistant in Podcast Drama Sandra," The Verge, May 6, 2018.

- Microsoft's chatbot Tay, built to learn by conversing with people online, offered an early cautionary tale: only a few hours after being released in 2016, it was tweeting white supremacist vitriol. Peter Lee, "Learning from Tay's Introduction," The Official Microsoft Blog, March 25, 2016.

- A high-ranking employee at a Big Five company shared this information with me on condition of anonymity.

- Mary L. Gray and Siddharth Suri, Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass (Boston: Houghton Mifflin Harcourt, 2019).

- Sarah T. Roberts, Behind the Screen: Content Moderation in the Shadows of Social Media (New Haven: Yale University Press, 2021). In contrast to companies' "continued opacity, obfuscation, and general unwillingness to discuss" the human role in content moderation, these same companies have spent years trying to call your attention to the work of conversation designers, in part because they're hoping that you'll spend your own money and time contributing "actions" or "skills" to their platforms (25).

- Cathy Pearl, Designing Voice User Interfaces: Principles of Conversational Experiences (Boston: O'Reilly, 2017), 9.

- Cathy Pearl, interview by Christopher Grobe, Google Hangouts, October 16, 2019.

- Joseph Weizenbaum, "ELIZA — a Computer Program for the Study of Natural Language Communication between Man and Machine," Communications of the ACM 9, no. 1 (January 1966): 36.

- Annie Dorsen, "On Algorithmic Theater," Theater Magazine (blog), 2012.

- Simone Natale, Deceitful Media: Artificial Intelligence and Social Life After the Turing Test (New York: Oxford University Press, 2021), 65.

- Janet H. Murray, Inventing the Medium: Principles of Interaction Design as a Cultural Practice (Cambridge: MIT Press, 2011), 7.

- Noah Wardrip-Fruin, Expressive Processing: Digital Fictions, Computer Games, and Software Studies (Cambridge: MIT Press, 2009), 25.

- Caroline Bassett, "The Computational Therapeutic: Exploring Weizenbaum's ELIZA as a History of the Present," AI & Society (February 2018): 9; Jennifer Rhee, "Misidentification's Promise: The Turing Test in Weizenbaum, Powers, and Short," Postmodern Culture 20, no. 3 (May 2010): 5; Lawrence Switzky, "ELIZA Effects: Pygmalion and the Early Development of Artificial Intelligence," SHAW: The Journal of Bernard Shaw Studies 40, no. 1 (2020): 63.

- Michele Elam, "Signs Taken for Wonders: AI, Art, and the Matter of Race," Daedalus 151, no. 2 (2022): 204.

- A.M. Turing, "Computing Machinery and Intelligence," Mind 59, no. 236 (1950): 433-60.

- Michael Scriven, "The Mechanical Concept of Mind," in Minds and Machines, ed. Alan Ross Anderson (Englewood Cliffs: Prentice Hall, 1964), 38.

- Paul Ziff, "The Feelings of Robots," in Minds and Machines, 101.

- A.M. Turing, Richard Braithwaite, Geoffrey Jefferson, and Max Newman, "Can Automatic Calculating Machines Be Said to Think?" in The Essential Turing, ed. B. Jack Copeland (Oxford: Clarendon Press, 2004), 503.

- Ibid., 503.

- See, e.g., Weizenbaum, Computer Power and Human Reason: From Judgment to Calculation (San Francisco: W.H. Freeman & Co, 1976), 18, 112-13, 115.

- Weizenbaum, "ELIZA," 36.

- Weizenbaum, Computer Power, 3, 189.

- For a brilliant study of Weizenbaum's reading of Shaw, see: Switzky, "ELIZA Effects."

- Weizenbaum, "Contextual Understanding by Computers," Communications of the ACM 10, no. 8 (August 1967): 479.

- Ibid., 478.

- Weizenbaum, Computer Power, 3.

- I exclude Apple/Siri from this claim, as Apple doesn't allow others to contribute to its platform the way Amazon does through Alexa "skills" and Google does through Google Assistant "actions."

- Purbita Saha, "Amazon's Alexa Is Ready to Help You Learn Bird Calls," Audubon, November 7, 2017.

- The systems are constantly changing. I would not expect to get precisely these responses in 2023.

- Rene Ritchie, "Secret Siri Commands: Cool Questions You Can Ask Right Now!" iMore, January 23, 2016; Katie Teague, "10 Weirdest Things Alexa Can Do on Your Amazon Echo," CNET, September 20, 2020.

- Pearl, Designing, 7.

- Sachit Mishra, "How We Designed It: The Google I/O '18 Action for the Google Assistant," Google Developers, September 16, 2018.

- Pearl, Designing, 142.

- Ibid., 143.

- Pearl, interview.

- For the history of this notion that there exists a great divide between scientific and humanistic/artistic "cultures," see: C.P. Snow, The Two Cultures (New York: Oxford University Press, 1959).

- Pearl, Designing, xii.

- I take this phrase from the 1955 novel and 1956 film The Man in the Gray Flannel Suit, which captured the zeitgeist and made this phrase a ubiquitous epithet for anyone caught in the web of increased corporatization.

- Allen Ginsberg, "Howl," in Collected Poems 1947-1997 (New York: Harper Collins, 2006), 137.

- W.D. Snodgrass, "A Cardinal," in Heart's Needle (New York: Knopf, 1959).

- Marshall McLuhan, "Age of Advertising," Commonweal 58 (September 11, 1953): 557.

- I have written more extensively about this midcentury moment in Christopher Grobe, "Advertisements for Themselves: Poetry, Confession, and the Arts of Publicity," in American Literature in Transition, 1950-1960, ed. Steven Belletto and Daniel Grausam (Cambridge: Cambridge University Press, 2017).

- Lindsay Stein, "Where Have All the Creatives Gone?" Ad Age, March 21, 2016. The article begins with a nod to AMC's hit show Mad Men, which had recently aired its final seasons: "If Don Draper were working in today's ad business, there's a good chance his business card wouldn't read Sterling Cooper but instead Google, Apple, or Facebook." It's a telling example, not least because Don Draper himself leaves New York for California by the end of that show. In fact, the series finale finds him in the Bay Area in 1971, the same year this region acquired its new nickname: Silicon Valley. And it ends with the image of Draper meditating at a fictionalized version of the Esalen Institute, now the favored retreat of troubled tech executives. For a recent reflection on Mad Men and the "creative revolutions" of advertising and technology, see: Aaron Chandler, "Our Costume Dramas of Creative Destruction," Post45, March 22, 2023.

- Shannon Steen, The Creativity Complex: Art, Tech, and the Seduction of an Idea (Ann Arbor: University of Michigan Press, forthcoming), 12-13.

- Fred Turner, "The Arts at Facebook: An Aesthetic Infrastructure for Surveillance Capitalism," Poetics 67 (April 2018): 59.

- This narrative is widely repeated in the industry today. For a contemporaneous record of it forming, see the 2015 white paper by Andrew Brown of Strategy Analytics, "The Role of Voice in IOT Applications."

- Sarah Brouillette, Literature and the Creative Economy (Stanford: Stanford University Press, 2014), 2.

- "Sr (Conversational) Writer — Siri," job ad, Apple, March 25, 2020.

- "Alexa Experience Writer," job ad, Amazon, 2020.

- Mark McGurl, The Program Era: Postwar Fiction and the Rise of Creative Writing (Cambridge: Harvard University Press, 2011).

- Elinor Fuchs, "EF's Visit to a Small Planet: Some Questions to Ask a Play," Theater 34, no. 2 (May 1, 2004): 6.

- Weizenbaum, "ELIZA," 43.

- For a capsule history of the word/concept "script" as it spread across these domains, see: Antonio Pizzo, Vincenzo Lombardo, and Rossana Damiano, "Algorithms and Interoperability between Drama and Artificial Intelligence," TDR/The Drama Review 63, no. 4 (November 20, 2019): 19.

- Weizenbaum, "ELIZA," 44.

- Janet H. Murray, Hamlet on the Holodeck: The Future of Narrative in Cyberspace (New York: The Free Press, 1997), 152.

- Fuchs, "EF's Visit to a Small Planet," 9.

- Weizenbaum, "ELIZA," 42.

- Joseph Weizenbaum, "Conversations with a Mechanical Psychiatrist," The Harvard Review 111, no. 2 (1965): 68.

- Nina Rastogi, interview by Christopher Grobe, in person, January 5, 2020.

- Farah Houston, interview by Christopher Grobe, in person, January 10, 2020. The following narrative is based on Houston's account.

- Matthew Chayes, "Three Women Are the Wits behind Google Assistant," Newsday, July 1, 2018.

- Diana Deibel and Rebecca Evanhoe, Conversations with Things: UX Design for Chat and Voice (New York: Rosenfeld Media, 2021), 57.

- Weizenbaum, "ELIZA," 41.

- Ibid., 41.

- All of the following quotations come from the transcript as he published it in 1965: Weizenbaum, "Conversations," 70-71.